This post will look at what security options OpenAPI gives us, what companies big and small are actually doing, and what you should be doing when you build your own API.

- Maybe not Hacker News, but they have a special deal with Firebase to give people unrestricted access to the firehose. I put together a quick Hacker News OpenAPI spec for some testing.

Security in the OpenAPI Spec

If you’re like me and you really dig formatting languages, you’re in for a good time because the OpenAPI Specification v3.1.0 is a treat. But if you don’t plan to add it to your nightstand for bedtime reading, this section will walk you through how security is defined.

There are five types of security, and three places to define it.

Five Types of Security in OpenAPI

In Swagger 2.0 you had three types. OpenAPI dropped one and added three.

The five types in OpenAPI are: apiKey, http, oauth2, mutualTLS, and openIdConnect. The first three are the most commonly seen, and we’ll talk about them a lot in this post. The last two are new, and are rarely seen in the wild.

(The original three types in Swagger 2.0 were basic, apiKey, and oauth2. You can still define Basic authentication in OpenAPI, using type: http with scheme: basic, but that is not recommended for reasons we’ll talk about later.)

Where Security is defined in OpenAPI

There are three places to place security in an API spec.

Under #/components/securitySchemes

This is where you define your security options.

(Simpler APIs will normally only have one option.)

The key name is whatever you want, you’ll use it to reference this object from elsewhere in the spec. The only required parameter is type, which should be one of the five types we talked about above. The other parameters change depending on the type.
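To make those rules concrete, here’s a minimal Python sketch that checks a securitySchemes mapping once it has been parsed into a dict. The table of required fields is my reading of the OpenAPI 3.1 Security Scheme Object (apiKey needs name and in, http needs scheme, oauth2 needs flows, openIdConnect needs openIdConnectUrl, and mutualTLS needs nothing extra); check_security_schemes is a hypothetical helper, not part of any tool.

```python
# Required fields per security scheme type, based on the OpenAPI 3.1
# Security Scheme Object. mutualTLS needs nothing beyond "type".
REQUIRED_FIELDS = {
    "apiKey": ("name", "in"),
    "http": ("scheme",),
    "oauth2": ("flows",),
    "openIdConnect": ("openIdConnectUrl",),
    "mutualTLS": (),
}

API_KEY_LOCATIONS = {"query", "header", "cookie"}


def check_security_schemes(schemes: dict) -> list[str]:
    """Return a list of problems found in a securitySchemes mapping."""
    problems = []
    for key, scheme in schemes.items():
        scheme_type = scheme.get("type")
        if scheme_type not in REQUIRED_FIELDS:
            problems.append(f"{key}: unknown or missing type {scheme_type!r}")
            continue
        for field in REQUIRED_FIELDS[scheme_type]:
            if field not in scheme:
                problems.append(f"{key}: {scheme_type} requires {field!r}")
        # Only check the location when one was given, to avoid a duplicate error.
        if scheme_type == "apiKey" and "in" in scheme and scheme["in"] not in API_KEY_LOCATIONS:
            problems.append(f"{key}: 'in' must be query, header, or cookie")
    return problems


# The two schemes from the example below, as a parsed dict.
schemes = {
    "myBearerAuth": {"type": "http", "scheme": "bearer", "bearerFormat": "jwt"},
    "myApiKey": {"type": "apiKey", "name": "key_name", "in": "header"},
}
print(check_security_schemes(schemes))  # []
```

The old Swagger 2.0 type basic fails this check, since it isn’t one of the five OpenAPI types.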

Here’s an example of an API that has two security schemes.

components:
  securitySchemes:
    myBearerAuth:
      type: http
      scheme: bearer
      bearerFormat: jwt
    myApiKey:
      type: apiKey
      name: key_name
      in: header

Interesting side note: The securitySchemes in components is a bit of an odd duck. Most of the time, components is for reusable objects. If you find yourself writing an object again and again, you can put it in components, and just reference that component. But with security, the securitySchemes in components is the only place to define security; it has to be defined here.

Under #/security

This is the default security requirement to use for the whole API. This should match up to a named security scheme that was (or will be) defined in #/components/securitySchemes.

If this top-level security array is missing, or if it’s empty, then this API will have no security by default. You can see this in a lot of smaller APIs that have a few open endpoints for everyone, but then define operation-specific security for the rest.

Here’s an example:

You might be wondering why there’s an empty array here. Good wondering! This array is for scopes, which are strings that let you define fine-grained security permissions. Often they’ll look like “read:foo” and “write:foo”. OAuth2 and its newer cousin, openIdConnect, always take scopes; other security types may not.

This is a key/value pair, and the value is always an array. If you don’t have any scopes, then you have to have an empty array.
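Here’s a small Python sketch that checks a security array the way described above: every key must name a scheme from #/components/securitySchemes, and every value must be an array, even when it’s empty. The function name and the scheme names are hypothetical, chosen to mirror the components example earlier.

```python
# Hypothetical scheme names, mirroring the components example earlier.
SCHEMES = {
    "myBearerAuth": {"type": "http", "scheme": "bearer"},
    "myApiKey": {"type": "apiKey", "name": "key_name", "in": "header"},
}


def check_requirements(requirements: list, schemes: dict) -> list[str]:
    """Check a `security` array (top-level or per-operation) against the
    scheme names defined under #/components/securitySchemes."""
    problems = []
    for requirement in requirements:
        for name, scopes in requirement.items():
            if name not in schemes:
                problems.append(f"{name}: not defined in securitySchemes")
            if not isinstance(scopes, list):
                # No scopes still means an empty list, never null or a string.
                problems.append(f"{name}: value must be an array, got {scopes!r}")
    return problems


print(check_requirements([{"myApiKey": []}], SCHEMES))    # []
print(check_requirements([{"myApiKey": None}], SCHEMES))  # ['myApiKey: value must be an array, got None']
```

The None case is the one to watch for: in YAML, writing `- myApiKey:` with nothing after the colon loads as null rather than an empty array.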

Under a specific operation

Just like above, we use one of the defined security schemes, but this time only for a specific operation on a specific path.

If there is no security defined for an operation, then the API will use the top-level default under #/security.

In this example, we use myBearerAuth for anyone who wants to get /users.

paths:
  /users:
    get:
      security:
        - myBearerAuth:
            - 'read:users'
            - 'public'

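Here we’re using scopes. The user of the SDK has to have both the “read:users” and the “public” scopes: scopes listed together in one requirement are all required, while alternatives go in separate entries of the security array.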
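Putting the two levels together, here’s a hedged Python sketch of how a client or gateway might work out which requirements apply to an operation and then check them. It follows my reading of the OpenAPI 3.1 rules: operation-level security overrides the top-level default (an empty array removes it), entries in the array are alternatives, and everything inside one entry, including every listed scope, is required. The function names, the top-level default, and the /health and /status paths are hypothetical.

```python
def effective_security(spec: dict, path: str, method: str) -> list[dict]:
    """Return the security requirements that apply to one operation."""
    operation = spec["paths"][path][method]
    if "security" in operation:
        # Operation-level security overrides the default, even when it is [].
        return operation["security"]
    # Otherwise inherit the top-level default; if that is missing, no security.
    return spec.get("security", [])


def is_authorized(requirements: list[dict], granted: dict[str, set[str]]) -> bool:
    """granted maps a scheme name to the scopes the caller holds for it."""
    if not requirements:
        return True  # nothing is required
    # Entries in the array are alternatives (OR); inside one entry, every
    # scheme and every listed scope must be satisfied (AND). An empty entry {}
    # therefore makes security optional.
    return any(
        all(name in granted and set(scopes) <= granted[name]
            for name, scopes in requirement.items())
        for requirement in requirements
    )


spec = {
    "security": [{"myApiKey": []}],  # hypothetical top-level default
    "paths": {
        "/users": {"get": {"security": [{"myBearerAuth": ["read:users", "public"]}]}},
        "/health": {"get": {}},                # inherits the default
        "/status": {"get": {"security": []}},  # explicitly open
    },
}

users = effective_security(spec, "/users", "get")
print(is_authorized(users, {"myBearerAuth": {"read:users"}}))            # False
print(is_authorized(users, {"myBearerAuth": {"read:users", "public"}}))  # True
```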

And that’s the last we’ll say about scopes.

Security in Practice

In practice, when hitting an endpoint, the security is sent out with the request using one of three methods. One of these is terrible, see if you can spot it.

- Bearer: A token in the header, which is how OAuth2 access tokens are usually sent. It looks like

Authorization: Bearer tokenValue

- API Key: A key/value pair that could be in the header, the query string, or even a cookie. The best practice is to put it in the header, where it often looks like

Authorization: apiKey apiKeyValue

- Basic: A username and password in the header, base64-encoded but not encrypted, so effectively in the clear. It looks like

Authorization: Basic base64(username:password)

Did you spot the terrible method? That’s right, it’s Basic. Don’t send a username and password around with every API call; it’s insecure. We’ll talk about recommendations later.
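To see why Basic is effectively in the clear, here’s a short Python sketch using only the standard library. The username and password are placeholders, and UTF-8 encoding is assumed; the point is that base64 is an encoding, not encryption, so anyone who can read the header can recover the credentials in one call.

```python
import base64


def basic_auth_header(username: str, password: str) -> str:
    """Build an HTTP Basic Authorization header value (UTF-8 assumed)."""
    raw = f"{username}:{password}".encode("utf-8")
    return "Basic " + base64.b64encode(raw).decode("ascii")


header = basic_auth_header("user", "pass")
print(header)  # Basic dXNlcjpwYXNz

# Anyone who can read the header gets the password back; no secret is involved.
encoded = header.split(" ", 1)[1]
print(base64.b64decode(encoded).decode("utf-8"))  # user:pass
```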

What API security are big companies using?

The big companies rarely have official and easy-to-find OpenAPI specs, so this information was mostly gleaned from their documentation portals.

Facebook uses OAuth2

Dropbox uses OAuth2

Twitter uses OAuth and OAuth2

GitHub uses OAuth2, and sometimes Basic Authentication, which is worrisome.

Microsoft Azure uses OAuth2, and recommends building your own apps similarly:

The results are unsurprisingly unanimous: big companies use OAuth2.

What API security are other companies using?

Getting info on a lot of other companies is more difficult. Either we have to dive deeply into thousands of API documentation portals, or we can run some simple statistics on a collection of specs. This post will go the latter route.

Methodology

To do this, I’m turning to my favorite JSON query tool: jq! In reality, though, many specs are written in YAML, so we’ll have to turn to its YAML wrapper: yq!

Here’s the basic command.

yq '.components.securitySchemes' openapi.yaml

A good source of OpenAPI specs is the OpenAPI Directory. It’s a well-maintained resource filled with thousands of specs. We’ll run some statistics on those.

To get some simple stats, I ran a series of UNIX commands on the specs in the OpenAPI Directory. They’re rough, but they work well enough to build our instincts. You can follow along at home.

# total number of files
find . -name "*.yaml" -print | wc -l
3771

# let's save some output, to look at it twice
find . -name "*.yaml" -print0 | \
  xargs -0 -n24 -P4 yq '.components.securitySchemes' \
  > out 2>/dev/null

# number of APIs without a securityScheme
grep ^null out | wc -l
2452

# ranked list
cat out | grep '"type":' | cut -d\" -f 4 | sort | uniq -c | sort -rn
980 apiKey
853 oauth2
177 http
2 openIdConnect
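For readers who’d rather not chain shell tools, the same tally can be sketched in Python. This assumes the specs have already been parsed into dicts; the directory’s files are YAML, so in practice you’d need a YAML parser such as PyYAML, which isn’t in the standard library. tally_security is a hypothetical name, and the three sample specs are made up for illustration.

```python
from collections import Counter


def tally_security(specs: list[dict]) -> tuple[int, Counter]:
    """Count specs with no securitySchemes, and scheme types across the rest."""
    missing = 0
    types = Counter()
    for spec in specs:
        schemes = (spec.get("components") or {}).get("securitySchemes")
        if schemes is None:
            # Mirrors `grep ^null`: absent or null securitySchemes.
            missing += 1
            continue
        types.update(scheme.get("type") for scheme in schemes.values())
    return missing, types


specs = [
    {"components": {"securitySchemes": {"a": {"type": "apiKey", "name": "k", "in": "header"}}}},
    {"components": {"securitySchemes": {"o": {"type": "oauth2", "flows": {}},
                                        "k": {"type": "apiKey", "name": "k", "in": "query"}}}},
    {"openapi": "3.1.0", "paths": {}},
]
missing, types = tally_security(specs)
print(missing)              # 1
print(types.most_common())  # [('apiKey', 2), ('oauth2', 1)]
```

Like the shell pipeline, this counts schemes rather than APIs, so a spec that defines both apiKey and oauth2 contributes to both rows.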

Now there’s one problem I know of right off the bat: these statistics are overwhelmed by a small number of companies with a lot of specs. Azure alone has 653 specs, each with multiple versions, for a total of 1,832 files in their subsection of this repo. It’s a classic power law distribution.

We’ll run this all again, but this time excluding the five largest offenders, so we only look at companies with a smaller number of specs. There is still more cleaning up we could do, but this gets us most of the way to what we want.

(The actual Unix commands for this part are left as an exercise to the reader; mine were pretty messy.)
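If you want a starting point for that exercise, here’s one hedged way to pick which files to keep, sketched in Python. It is not the command set used for the results here, and it assumes the directory groups specs as APIs/&lt;provider&gt;/..., which you should check against your own checkout. The function names are hypothetical; the surviving paths would then go through the same yq pipeline.

```python
from collections import Counter
from pathlib import PurePosixPath


def provider_of(path: str) -> str:
    # Assumption: paths look like APIs/<provider>/..., e.g. "APIs/azure.com/...".
    parts = PurePosixPath(path).parts
    return parts[parts.index("APIs") + 1]


def drop_largest_providers(paths: list[str], n: int = 5) -> list[str]:
    """Remove every file belonging to the n providers with the most files."""
    counts = Counter(provider_of(p) for p in paths)
    largest = {provider for provider, _ in counts.most_common(n)}
    return [p for p in paths if provider_of(p) not in largest]


paths = [
    "APIs/azure.com/network/1/openapi.yaml",
    "APIs/azure.com/storage/1/openapi.yaml",
    "APIs/googleapis.com/drive/v1/openapi.yaml",
    "APIs/example.org/1.0/openapi.yaml",
]
print(drop_largest_providers(paths, n=1))
```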

Here are the new results.

# number of APIs without a securityScheme
499

# ranked list
245 apiKey
123 http
88 oauth2
2 openIdConnect

Conclusions

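Now this is interesting, but not too surprising: once the largest providers are excluded, apiKey leads by a much wider margin, and http pulls ahead of oauth2.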

OAuth2 is a strong choice for API authorization, but it’s also a difficult and complex framework; the IETF working group lost a key editor over that fact, and even people who spend a lot of time in the OAuth2 world will sometimes recommend against it. Conversely, apiKey can be handled with simple home-brewed solutions.

The http type is a more complex question. Everything depends on what comes next, in the scheme parameter. This parameter is required for http types, and should name a scheme registered in the IANA HTTP Authentication Scheme Registry.

I did some quick manual checking of APIs with an http type, and I found a fairly even mix of two schemes:

- “scheme: bearer” - This is passing a token in the headers. Often this is defined as a JWT token, which is a very modern and secure method.

- “scheme: basic” - This is passing the username and password in the headers, and is basically insecure. OpenAPI specs that use this were sometimes using it as an option alongside other security schemes, but it was still unfortunate to see it at all.

Conclusions part 2, when security is missing

I did learn one big thing: at the beginning of this post I said, “Every API has security,” and now I know that many APIs do not, in fact, have security. If I included the “no security” in the pie chart it would have been 52% of the total.
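The 52% figure lines up with the filtered run: if the pie combined the 499 specs without a securityScheme with the per-type scheme counts, the arithmetic works out as below. That reading is my reconstruction rather than something stated here, and it mixes spec counts with scheme counts.

```python
# Counts from the filtered run above (five largest providers excluded).
no_security = 499
by_type = {"apiKey": 245, "http": 123, "oauth2": 88, "openIdConnect": 2}

total = no_security + sum(by_type.values())  # 957 slices in the pie
print(f"no security: {no_security / total:.0%}")  # no security: 52%
for name, count in by_type.items():
    print(f"{name}: {count / total:.0%}")
```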

To understand this problem more, I did some spot checks of specs without security.

- Many were small projects, and they possibly were never meant to be used. I’m suspicious anytime I see a spec that was committed to GitHub with version 1.0.0 and then never touched again.

- Some specs were written by enthusiasts for an existing but un-specced API, and it’s possible they just didn’t write a complete spec.

- I saw one API that did have token-based security, but it was only mentioned in the examples.

- Amazon has their AWS OpenAPI vendor extensions, and specs that use them may not show up in my methodology.

- There were a few specs that were autogenerated from other places, like protobufs, and it’s likely that something was lost in translation.

So what should you do?

Use OAuth2 if you can, apiKey if you want.

That’s it. That’s the recommendation.

For more details, keep reading. But you can stop here and be happy with what you’ve learned.

apiKey

In spite of having the word API in it, apiKey is technically no longer the best practice for APIs. Phil Karlton said that naming things is one of the two hardest things in computer science, and this small irony is more proof of that.

But despite what the best practice people say, whoever they are, API keys are still a commonly-used method of security, and perfectly fine for many small APIs.

If you want to keep using API key security, make sure the key is submitted in the headers and not in the query string, so it’s less likely to leak. (If you’ve been using the GitHub API, you may have noticed they deprecated query tokens last year, after a series of deliberate “brown-outs” to get people off their butts.)
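Here’s a small Python illustration of why the query string is the riskier spot, using only urllib from the standard library and never sending a request. The endpoint, the api_key parameter, the X-API-Key header, and the key itself are hypothetical; in a real spec, the name field of the apiKey scheme decides what the parameter or header is called.

```python
from urllib.parse import urlencode
from urllib.request import Request

API_KEY = "sk_test_123"                   # placeholder, not a real key
BASE = "https://api.example.com/v1/pets"  # hypothetical endpoint

# Key in the query string: it becomes part of the URL itself.
in_query = Request(f"{BASE}?{urlencode({'api_key': API_KEY})}")
print(in_query.full_url)  # https://api.example.com/v1/pets?api_key=sk_test_123

# Key in a header: the URL stays clean.
in_header = Request(BASE, headers={"X-API-Key": API_KEY})
print(in_header.full_url)  # https://api.example.com/v1/pets
```

URLs are routinely written to server access logs, proxy logs, and browser history, so the first version spreads the key to all of those; the header version keeps it out of the URL entirely.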

But even here there are exceptions. Cisco uses apiKey in a cookie that is obtained from a Single-Sign-On assertion.

Basic

Don’t use basic authentication.

Don’t submit the user’s username and password in the clear.

If you still want to keep using your Basic authentication: don’t.

Even the RFC that defined Basic Authentication says don’t.

The Basic authentication scheme is not a secure method of user authentication…

— RFC 2617: HTTP Authentication: Basic and Digest Access Authentication
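If you want to enforce these recommendations in CI, a small check over your securitySchemes can catch both problems. Here’s a hypothetical lint_security function in Python, a sketch rather than a feature of any existing linter. It compares the http scheme name case-insensitively, since HTTP authentication scheme names are case-insensitive per RFC 7235; the sample scheme names are made up.

```python
def lint_security(schemes: dict) -> list[str]:
    """Flag the two risky choices discussed above in a securitySchemes mapping."""
    warnings = []
    for name, scheme in schemes.items():
        kind = scheme.get("type")
        # HTTP auth scheme names are case-insensitive, so "Basic" counts too.
        if kind == "http" and str(scheme.get("scheme", "")).lower() == "basic":
            warnings.append(f"{name}: Basic auth sends the password on every call")
        if kind == "apiKey" and scheme.get("in") == "query":
            warnings.append(f"{name}: API key in the query string; move it to a header")
    return warnings


schemes = {
    "legacyLogin": {"type": "http", "scheme": "Basic"},
    "queryKey": {"type": "apiKey", "name": "api_key", "in": "query"},
    "myBearerAuth": {"type": "http", "scheme": "bearer", "bearerFormat": "jwt"},
}
for warning in lint_security(schemes):
    print(warning)
```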

OAuth2

OAuth2 is where you probably want to be, but it has some gotchas, and its best practices have evolved over time. A few notes to be aware of are:

Now what?

If you’ve come this far, then you may have excitedly built something like this in your OpenAPI spec.

{
  "type": "oauth2",
  "flows": {
    "authorizationCode": {
      "authorizationUrl": "https://example.com/api/oauth/dialog",
      "tokenUrl": "https://example.com/api/oauth/token",
      "scopes": {
        "write:pets": "modify pets in your account",
        "read:pets": "read your pets"
      }
    }
  }
}
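To give a sense of what the client side involves, here’s a minimal Python sketch of just the first step of that authorizationCode flow: building the URL the user is sent to. It adds PKCE (RFC 7636), which the OAuth 2.0 Security Best Current Practice recommends for authorization code clients but which the spec above doesn’t mention. The client_id and redirect_uri values are hypothetical, and exchanging the code at tokenUrl, refreshing tokens, and storing them safely are all left out.

```python
import base64
import hashlib
import secrets
from urllib.parse import urlencode

# The authorizationCode flow object from the spec above, as a parsed dict.
FLOW = {
    "authorizationUrl": "https://example.com/api/oauth/dialog",
    "tokenUrl": "https://example.com/api/oauth/token",
    "scopes": {
        "write:pets": "modify pets in your account",
        "read:pets": "read your pets",
    },
}


def pkce_pair() -> tuple[str, str]:
    """Return (code_verifier, code_challenge) using the S256 method of RFC 7636."""
    verifier = secrets.token_urlsafe(32)  # 32 random bytes -> 43 URL-safe chars
    digest = hashlib.sha256(verifier.encode("ascii")).digest()
    challenge = base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")
    return verifier, challenge


def authorization_url(flow: dict, client_id: str, redirect_uri: str,
                      scopes: list[str]) -> tuple[str, str, str]:
    """Build the URL to send the user to.

    Returns (url, state, verifier); the caller must keep state and verifier
    to validate the callback and to redeem the code at flow["tokenUrl"].
    """
    unknown = set(scopes) - set(flow["scopes"])
    if unknown:
        raise ValueError(f"scopes not declared in the spec: {sorted(unknown)}")
    verifier, challenge = pkce_pair()
    state = secrets.token_urlsafe(16)  # guards against CSRF on the callback
    query = urlencode({
        "response_type": "code",
        "client_id": client_id,
        "redirect_uri": redirect_uri,
        "scope": " ".join(scopes),  # OAuth2 scopes are space-delimited
        "state": state,
        "code_challenge": challenge,
        "code_challenge_method": "S256",
    })
    return f"{flow['authorizationUrl']}?{query}", state, verifier


# Hypothetical client registration values.
url, state, verifier = authorization_url(
    FLOW, "my-client-id", "https://app.example.com/callback", ["read:pets"]
)
print(url)
```

The spec only declares the URLs and scopes; client registration, state handling, and PKCE are all things an SDK has to add on top.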

Your customers are happy to finally get some solid security, but then they ask you how to use it in their code. You spent all this time carefully implementing good security in the server, and now you have to implement the other end of it in an SDK?

That is a lot of difficult work.

If only there was a better solution.

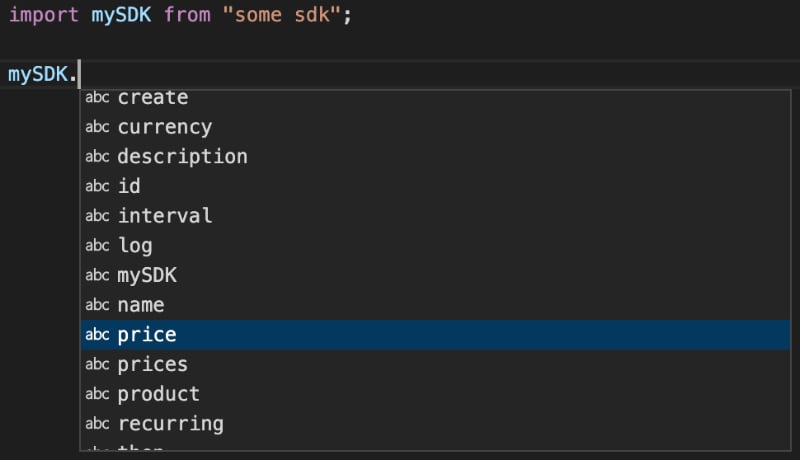

Use liblab!


Give us your API, and we’ll generate SDKs with best practices built in. We won’t even generate an SDK that would put your users at risk; instead, we’ll fail with friendly error messages and recommendations on how to fix it. We’ll make sure your security choices never become someone else’s postmortem.

We even produce documentation for your API, so your users will know exactly how your security policies work.

liblab!